Gender-based harassment at work falls under the broader topic of violence against women. A recent report on a 2021 survey of more than 100,000 persons in the European Union shows the shocking extent of violence experienced in the previous 12 months as well as over a lifetime (Source: Eurostat, PDF report). These data have to be interpreted with care, since it is a well-known statistical phenomenon that in some countries such misbehavior is reported and talked about more easily and openly than in others. Therefore, the highest figures appear in countries where it is safe to talk about the issue, for example the Nordic countries (the so-called Nordic paradox), whereas in other countries violence against women and sexual harassment at work are still much less talked about and addressed in public. Italy even deviated from the joint EU data collection.

It is important to address the topic in the media and to expose the attempts at cover-up in many societies. This is a long-term process, but awareness of the problem has to be raised continuously. In recent years, most European societies have made a lot of progress towards more equal treatment of women. However, there is a need for “zero tolerance” of violence against women and of sexual harassment at work, which prevents women from taking equal shares across all professions. Monitoring the process is an important step, as it is necessary to target safety measures better. More detailed statistics are also needed to address intersectionality. Young women tend to suffer more than older ones; perhaps the latter have learned to be more careful in avoiding or evading critical situations. It is, however, men who have to reconsider their behaviour towards women at every age, at work as well as at home.

Water Quality

Obviously, water is not just an issue of quantity but also of quality. The availability of sufficient quantities of water in a region depends on rainfall, on how it is stored, and on how these water resources are used. Water quality is closely tied to quantity, because a lack of inflow causes the concentration of pollutants in the water to rise. Global warming will most likely intensify concerns about both the quantity and the quality of water.

Public services are in charge of sampling and monitoring water quality for consumers. Whether water supply is run by public bodies, private companies or public-private partnerships, water quality needs to be checked regularly. Public institutions do a great job of monitoring water quality, but as science progresses, new sources of pollution enter the already complex analysis of water quality. New chemicals and residues of medical or pharmaceutical products have been detected in water and have sometimes reached critical or unhealthy levels.

More detailed monitoring is necessary, and new digital tools make it possible to improve exactly this type of monitoring and to inform policy makers about shifting patterns.

One project of this type, “Urban Green Eye”, uses satellite images of Germany to monitor the artificialization of formerly vegetated land. Independent groups, citizens or communities might find it useful to carry out their own sampling and testing to guard against abuses or dysfunctional public monitoring systems. Start-ups like “Hydroguard” offer services to support activists, communities or public services in their own efforts to ensure water quality.

Patient Empowerment

Patient empowerment is a well-established practice in the treatment of diabetes: measuring one's own blood sugar and adjusting medication based on the self-monitored data is common practice. Among patients with high blood pressure, this kind of empowerment is less prevalent. A medical study carried out in Valencia (Spain) by Martínez-Ibáñez et al. (2024) tested the effects of such self-monitoring and self-medication.

The results, published in JAMA, attracted considerable attention in the profession, as empowering patients is one way of coping with the likely growing shortage of medical professionals in aging societies. Whereas other studies found that total costs to the medical system might increase, the study in Spain provides evidence of a cost-reducing effect of such empowerment. Following the establishment of a “medication based on an individualized prearranged plan used in primary care”, the self-administering participants achieved a significant decrease in their blood pressure that lasted until the end of the study, 24 months after the beginning of the trial. Dropouts from the study appear to have followed a random pattern.

The conclusion supports the potential of patient empowerment in the treatment of high blood pressure, a widespread condition, beyond regular visits to the doctor. Monitoring changes in lifestyle adds to this and helps keep health care costs under control in aging societies.

AI and PS

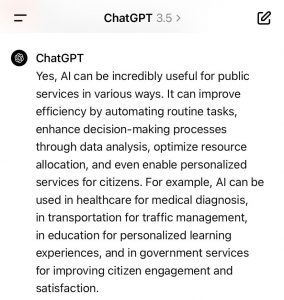

AI chatbots such as ChatGPT are guided by so-called prompts. When you enter “what is AI”, the machine returns a definition of itself. If you continue the chat and enter “Is it useful for public services?” (PS), you receive the AI's opinion on its own usefulness (positive, of course) and some examples of where AI has good potential to improve the state of affairs in public services. ChatGPT advocates AI in the PS mainly for four reasons: (1) efficiency; (2) personalisation of services; (3) citizen engagement; (4) citizen satisfaction (see the image above). The perspective of public service employees is largely missing from ChatGPT's answer. This is a more ambiguous issue, which would probably need more space and additional, explicit prompts to obtain a clear answer. Given the known concerns about AI, such as gender bias or biased input data, the introduction of AI in public services has to be accompanied by thorough monitoring. The legal limits on AI applications are stricter in public services, as the production of official documents is subject to additional security requirements.

This certainly does not preclude the use of AI in PS, but it requires more extensive and rigorous testing of AI applications in the PS. Such testing frameworks are still being developed, even in computer science, as the sources of bias are manifold and sometimes tricky to detect even for experts in the field. To avoid bias, prior training with specific data sets (for example, thousands of possible prompts) has to be performed, or test image sets have to be adapted. The task is big, but building and testing step by step promises useful results. It remains a challenge to find the right balance between the risks and the potential of AI in PS.