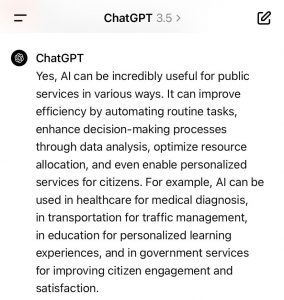

AI like in ChatGPT is guided by so-called prompts. After the entry of “what is AI” the machine returns a definition of itself. If you continue the chat with ChatGPT and enter: “Is it useful for public services” (PS), you receive an opinion of AI on its own usefulness (of course positive) and some examples in which AI in the public services have a good potential to improve the state of affairs.  The AI ChatGPT is advocating AI for the PS for mainly 4 reasons: (1) efficiency purposes; (2) personalisation of services; (3) citizen engagement; (4) citizen satisfaction. (See image below). The perspective of employees of the public services is not really part of the answer by ChatGPT. This is a more ambiguous part of the answer and would probably need more space and additional explicit prompts to solicit an explicit answer on the issue. With all the know issues of concern of AI like gender bias or biased data as input, the introduction of AI in public services has to be accompanied by a thorough monitoring process. The legal limits to applications of AI are more severe in public services as the production of official documents is subject to additional security concerns.

The AI ChatGPT is advocating AI for the PS for mainly 4 reasons: (1) efficiency purposes; (2) personalisation of services; (3) citizen engagement; (4) citizen satisfaction. (See image below). The perspective of employees of the public services is not really part of the answer by ChatGPT. This is a more ambiguous part of the answer and would probably need more space and additional explicit prompts to solicit an explicit answer on the issue. With all the know issues of concern of AI like gender bias or biased data as input, the introduction of AI in public services has to be accompanied by a thorough monitoring process. The legal limits to applications of AI are more severe in public services as the production of official documents is subject to additional security concerns.

This does certainly not preclude the use of AI in PS, but it requires more ample and rigorous testing of AI-applications in the PS. Such testing frameworks are still in development even in informatics as the sources of bias a manifold and sometimes tricky to detect even for experts in the field. Prior training with specific data sets (for example of thousands of possible prompts) has to be performed or sets of images for testing adapted to avoid bias. The task is big, but step by step building and testing promise useful results. It remains a challenge to find the right balance between the risks and the potentials of AI in PS.