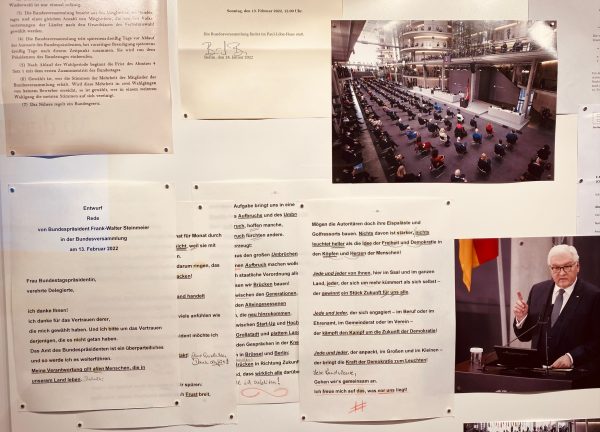

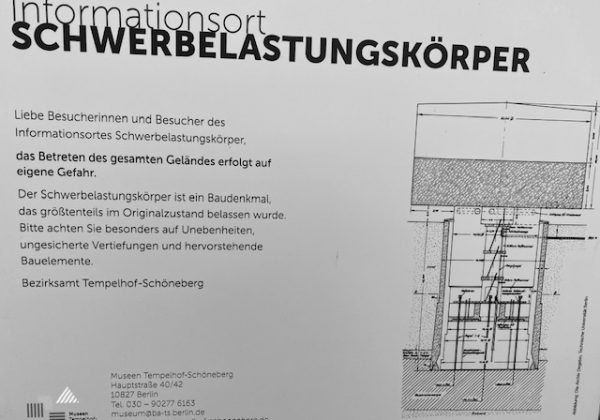

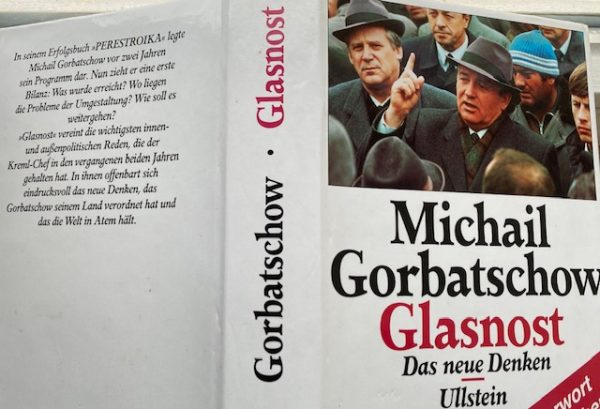

Looking back at least as far as German unification in 1990, we have to reconsider what constitutes an external versus an internal threat. Since the Glasnost years and the implosion of the Soviet Union, the external Russian threat has been transformed, across the globe, into more hybrid threats coming from internal forces that have been "instrumentalized" by external ones. In an essay in Le Monde (21 February 2026), Valentine Faure describes this turn toward internal politics as a new form of interference by external forces in European political, economic and social affairs. The basic strategy is as old as the Trojan horse, but it has been refined to work over much longer time spans and extended to new scientific applications.

Any powerful party with interests at stake would rather use the soft power of persuasion than brute force to reach its political objectives. In this hybrid, or even open, exercise of power from inside a society, corruption and vote-buying, whether direct or indirect, have come to be treated as legitimate means, in place of a more traditional foreign-policy strategy.

The confrontation of the bipolar world made it natural to emphasize external military power. A multilateral and more multilayered international arena rules out that kind of bipolar confrontation to some extent, because conflicts on several fronts increase risks exponentially. In the search for other strategies, it seems plausible that powers will turn to hybrid and disguised forms of external force. Europe, and democratic systems in general, are more vulnerable than autocratic states, because belief in an open society is part of their DNA. Open societies will have to sharpen their sensory systems to detect external threats that have been transformed into internal ones.