We are beginning to analyze the impact of AI on our behavior. It is important to be aware not only of how we interact with AI (Link), but also of what effect the use of AI (disclosed or not) will have on our social behavior. Knowing that AI is used might change our willingness to cooperate, or increase or decrease pro-social behavior. The use of AI in the form of an algorithm to select job candidates might introduce a specific bias, but it can equally be constructed to favour certain criteria in the selection of candidates. The choice of criteria, and the process of choosing them, therefore becomes more important.

Next comes the question of whether the announcement states that AI will be used in the selection process. Some may interpret this as a sign that a “more objective” procedure will be applied, whereas others read it as a bad sign of an anonymous process and of a lack of compassion in an organization focused mostly on the efficiency of its procedures. Fabian Dvorak, Regina Stumpf et al. (2024) demonstrate with experimental evidence from various forms of games (prisoner’s dilemma, binary trust game, ultimatum game) that a whole range of outcomes is negatively affected (trust, cooperation, coordination and fairness). This has serious consequences for society. The social fabric might deteriorate if AI is widely applied. Even, or particularly, the undisclosed use of AI already shows up as a lack of trust among the majority of participants in these experiments.

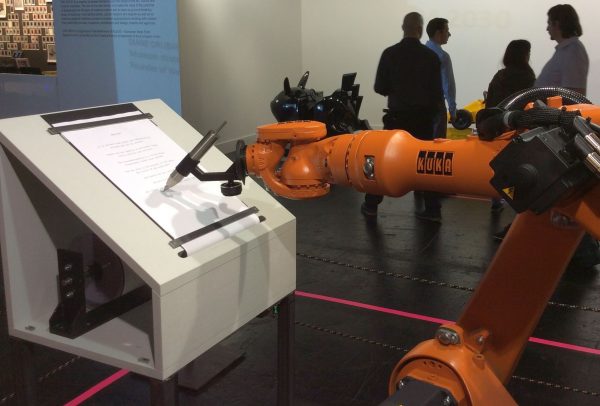

In sum, we are likely to change our behavior if we suspect AI is involved in the selection process or in content creation. This should be a serious warning to all sorts of content-producing media, science, and public and private organizations. It feels a bit like microplastics or PFAS: at the beginning we did not take it seriously, and before long AI is likely to be everywhere without us knowing or being aware of its use. (Image taken at the Frankfurt Book Fair, 2017-10!)