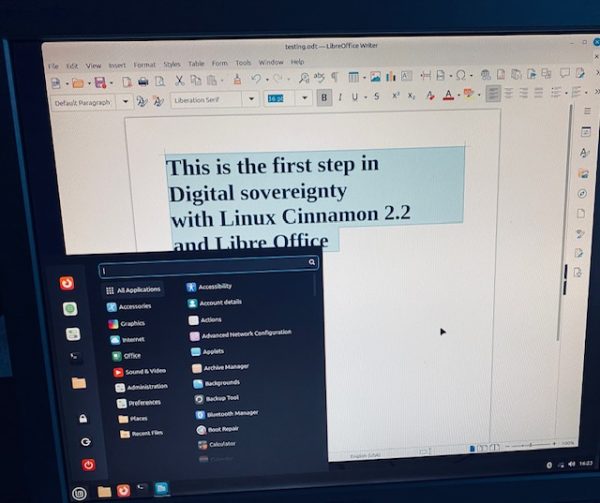

We grow accustomed to our digital environment through the routine use of applications, without even thinking about it. After years of using only one browser or office package, we have forgotten how to make conscious choices, both in companies and on our private computers. Digital sovereignty, however, asks us to take back control of these computer systems before they take control of us. The AI boom will make many things easier for us, even if we know little about the technology behind them. Just by talking to your smartphone, you will be able to complete many tasks or searches without most of the steps that were necessary before. Knowing how to operate a typewriter or keyboard will become more and more obsolete, unless you really need to change something fundamental on your computer, like the operating system or an expensive office program. Keep practicing with alternative and new software and you’ll stay the master of your digital environment.